Move data to Azure Archive Storage using PowerShell

Concept

You may have come across the term multi-tiered storage. This means that the storage solution has multiple arrays: fast but expensive, and slow but cheap. Files accessed frequently are stored on very fast SSD disks, and files accessed less frequently are stored on much cheaper but much slower spinning disks. Files designated for long-term archives are often stored on magnetic tapes. Most enterprise storage solutions can handle the first two tiers automatically, and some can offload backups to tapes on a schedule.

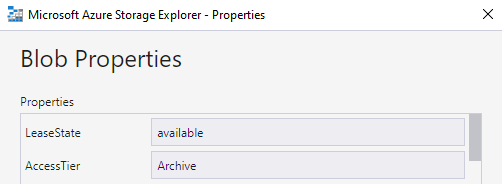

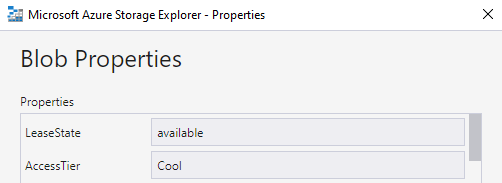

Azure offers similarly tiered storage: Hot, Cool and Archive. The Archive tier is very cheap (and yes, also very slow) storage designed for long-term retention. An Azure Storage Account allows changing the tier of existing, individual files (blobs). The current tier can be seen in Azure Storage Explorer:

We can either move files that already exist in the storage account to the Archive tier, or upload files as we usually would and then mark them for archiving. Done by hand, this is an entirely manual process, but it can easily be automated with PowerShell.
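The tier can also be read from PowerShell instead of Storage Explorer. The sketch below counts blobs per tier; it assumes the Az.Storage module, and `Get-BlobTierSummary` is a hypothetical helper name. It reads the tier from `ICloudBlob.Properties.StandardBlobTier`, the same property the archiving script later in this post relies on.

```powershell
# Hypothetical helper: count blobs per access tier.
# Assumes each input object exposes ICloudBlob.Properties.StandardBlobTier,
# as the blobs returned by Get-AzStorageBlob (Az.Storage) do.
function Get-BlobTierSummary {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        $Blob
    )
    begin { $counts = @{} }
    process {
        # Blobs whose tier is not reported are counted as "Unknown"
        $tier = [string]$Blob.ICloudBlob.Properties.StandardBlobTier
        if ([string]::IsNullOrEmpty($tier)) { $tier = "Unknown" }
        $counts[$tier] = [int]$counts[$tier] + 1
    }
    end {
        # Emit one object per tier, sorted by tier name
        $counts.GetEnumerator() | Sort-Object Key | ForEach-Object {
            [pscustomobject]@{ Tier = $_.Key; Count = $_.Value }
        }
    }
}

# Usage (requires a real storage account; names are placeholders):
# $ctx = New-AzStorageContext -StorageAccountName "YOURSTORAGEACCOUNT" -StorageAccountKey "YOURSTORAGEACCOUNTKEY"
# Get-AzStorageBlob -Container "archive" -Context $ctx | Get-BlobTierSummary
```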

Microsoft does not reveal how Archive Storage works or what exactly happens behind the scenes, i.e. whether files are physically moved to tapes or just copied to slower and bigger storage arrays. The speed of retrieval operations suggests that mechanical operations, i.e. tapes, are involved. If you know the internals of Archive Storage, please share them in the comments.

The archiving process can take a few minutes to a few hours, depending on the file size and how busy the archive storage is.

As of January 2020, according to the official Azure pricing (first 50 TB band), ARCHIVE storage is about 90% cheaper than the COOL tier, about 95% cheaper than the HOT tier and about 99.3% cheaper than PREMIUM storage.

| | PREMIUM | HOT | COOL | ARCHIVE |
|---|---|---|---|---|
| First 50 terabytes (TB) / month | $0.15 per GB | $0.0184 per GB | $0.01 per GB | $0.00099 per GB |
| Next 450 TB / month | $0.15 per GB | $0.0177 per GB | $0.01 per GB | $0.00099 per GB |
| Over 500 TB / month | $0.15 per GB | $0.0170 per GB | $0.01 per GB | $0.00099 per GB |
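The percentages above follow directly from the per-GB prices. The sketch below reproduces them and estimates a monthly bill for a given volume. It is only a sketch: the prices are hard-coded from the January 2020 table (first 50 TB band only), `Get-MonthlyStorageCost` is a hypothetical helper, and only capacity is priced, so check current Azure pricing before relying on the numbers.

```powershell
# Per-GB monthly prices for the first 50 TB band, copied from the January 2020 table.
$PricePerGb = [ordered]@{
    Premium = 0.15
    Hot     = 0.0184
    Cool    = 0.01
    Archive = 0.00099
}

# Hypothetical helper: monthly storage cost (USD) for a given volume in every tier.
# Capacity only; transaction and data retrieval charges are not included.
function Get-MonthlyStorageCost {
    param([Parameter(Mandatory)][double]$SizeGb)
    foreach ($tier in $PricePerGb.Keys) {
        $price = $PricePerGb[$tier]
        [pscustomobject]@{
            Tier             = $tier
            MonthlyCostUsd   = [math]::Round($SizeGb * $price, 2)
            # How much cheaper Archive is than this tier, in percent
            ArchiveSavingPct = [math]::Round((1 - $PricePerGb["Archive"] / $price) * 100, 1)
        }
    }
}

# Example: 10 TB (10,240 GB) of year-end snapshots
Get-MonthlyStorageCost -SizeGb 10240 | Format-Table
```

For 10,240 GB this gives about $1,536 per month on PREMIUM versus about $10.14 on ARCHIVE, and savings of 90.1%, 94.6% and 99.3% against COOL, HOT and PREMIUM respectively.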

When should I use the Archive Tier?

Remember! The Archive tier is slow. It can take several hours to bring the files back before you can use them.

Do not use Archive Storage for operational (most recent) backups, as you may not be able to retrieve the backup on time. Ensure you comply with your Recovery Time Objective (RTO) requirements.
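One way to make the RTO check explicit is to add up the worst-case time needed to get an archived backup back and compare it with the RTO. A minimal sketch: `Test-ArchiveRto` is a hypothetical helper, and the default of 15 hours is an assumption based on Microsoft's documented upper bound for standard-priority rehydration, so substitute the figures that apply to your account, rehydration priority and database size.

```powershell
# Hypothetical helper: does restoring from the Archive tier fit within the RTO?
# RehydrationHours defaults to 15, an assumed worst case for standard-priority rehydration;
# DownloadHours and RestoreHours are your own estimates for the remaining steps.
function Test-ArchiveRto {
    param(
        [Parameter(Mandatory)][double]$RtoHours,
        [double]$RehydrationHours = 15,
        [double]$DownloadHours = 0,
        [double]$RestoreHours = 0
    )
    $total = $RehydrationHours + $DownloadHours + $RestoreHours
    [pscustomobject]@{
        TotalHours = $total
        MeetsRto   = $total -le $RtoHours
    }
}

# Example: a 4-hour RTO for an operational backup cannot be met from the Archive tier
Test-ArchiveRto -RtoHours 4 -DownloadHours 1 -RestoreHours 1
```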

Regulatory requirements

If your company deals with financial data, you will likely be audited at some point in your career and may already be familiar with regulatory compliance. Whether it is SOX or any other regulation, you may be required to store a snapshot of the financial data at the end of each financial year for several years. Any future need for this historical data will likely not be urgent, so there should be enough time to retrieve it from the Archive tier.

Access Audits

Access auditing is another regulatory requirement: the audit data may not need to be accessed often, but it must be kept for a long time, just in case. Windows event logs or the SQL Server ERRORLOG are therefore good candidates for offloading to Azure Blob Storage and archiving.
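For logs there is no need to upload to a hotter tier first: `Set-AzStorageBlobContent` accepts a `-StandardBlobTier` parameter, so files can land directly in the Archive tier. A minimal sketch, assuming the Az.Storage module and an existing container; `Get-LogBlobName` and `Send-LogToArchive` are hypothetical helpers, the date-prefixed naming scheme is just one possible convention, and the folder path and container name in the usage lines are illustrative. Only the cycled SQL Server logs (ERRORLOG.1, ERRORLOG.2, ...) are picked up, because the current ERRORLOG is still being written to.

```powershell
# Hypothetical helper: build a blob name such as "sqlserver/HOST01/2020-01-31/ERRORLOG.1"
function Get-LogBlobName {
    param(
        [Parameter(Mandatory)][string]$Source,
        [Parameter(Mandatory)][string]$ComputerName,
        [Parameter(Mandatory)][string]$FileName,
        [datetime]$Date = (Get-Date)
    )
    "{0}/{1}/{2}/{3}" -f $Source, $ComputerName, $Date.ToString("yyyy-MM-dd"), $FileName
}

# Hypothetical helper: upload cycled ERRORLOG files straight into the Archive tier (Az.Storage).
function Send-LogToArchive {
    param(
        [Parameter(Mandatory)][string]$Path,       # folder holding the SQL Server logs (illustrative)
        [Parameter(Mandatory)][string]$Container,  # assumed to exist already
        [Parameter(Mandatory)]$Context             # from New-AzStorageContext
    )
    Get-ChildItem -Path $Path -File |
        Where-Object { $_.Name -match '^ERRORLOG\.\d+$' } |
        ForEach-Object {
            $blobName = Get-LogBlobName -Source "sqlserver" -ComputerName $env:COMPUTERNAME -FileName $_.Name
            Set-AzStorageBlobContent -File $_.FullName -Container $Container -Blob $blobName `
                -StandardBlobTier Archive -Context $Context -Force
        }
}

# Usage (path and container name are illustrative):
# $ctx = New-AzStorageContext -StorageAccountName "YOURSTORAGEACCOUNT" -StorageAccountKey "YOURSTORAGEACCOUNTKEY"
# Send-LogToArchive -Path "D:\SQLLogs" -Container "auditlogs" -Context $ctx
```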

Data Migration Projects

Quite often, after a data migration project has concluded, we may want to archive the old data or the entire system for future reference or, again, for regulatory requirements.

Risks of long-term storage

We do not need backups. We need restores. To be sure we can restore from a backup, we should test it periodically, as stored data can become corrupted over time. The risk is minimal, especially with Azure Storage, where the Microsoft folks make sure storage and data integrity are super-resilient, but corruption is still possible, particularly while the data is being transferred to remote storage.
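A cheap integrity check during a test restore is to compare the MD5 hash of the restored file with the Content-MD5 value stored on the blob. A minimal sketch, assuming the blob has already been rehydrated and downloaded, and that it has a Content-MD5 value at all (it may be empty if the uploading tool did not set it); `Get-ContentMd5` and `Test-RestoredFile` are hypothetical helpers and the blob name in the usage lines is illustrative. Content-MD5 is stored Base64-encoded, which is why the hash is Base64-encoded here rather than compared as hex.

```powershell
# Hypothetical helper: Base64-encoded MD5 of a local file, the encoding Azure uses for Content-MD5.
function Get-ContentMd5 {
    param([Parameter(Mandatory)][string]$Path)
    # Resolve relative paths against the PowerShell location, not the .NET working directory
    $fullPath = (Resolve-Path -LiteralPath $Path).ProviderPath
    $md5 = [System.Security.Cryptography.MD5]::Create()
    $stream = [System.IO.File]::OpenRead($fullPath)
    try {
        [System.Convert]::ToBase64String($md5.ComputeHash($stream))
    }
    finally {
        $stream.Dispose()
        $md5.Dispose()
    }
}

# Hypothetical helper: compare a restored file with the Content-MD5 recorded on the blob.
function Test-RestoredFile {
    param(
        [Parameter(Mandatory)][string]$Path,
        [string]$ExpectedContentMd5
    )
    if ([string]::IsNullOrEmpty($ExpectedContentMd5)) {
        Write-Warning "No Content-MD5 recorded for this blob; cannot verify $Path"
        return $false
    }
    (Get-ContentMd5 -Path $Path) -eq $ExpectedContentMd5
}

# Usage after rehydrating and downloading the blob (Az.Storage; names are illustrative):
# $blob = Get-AzStorageBlob -Container "archive" -Blob "backup.bak" -Context $ctx
# Test-RestoredFile -Path ".\backup.bak" -ExpectedContentMd5 $blob.ICloudBlob.Properties.ContentMD5
```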

Ok, show me how to automate it

Everyone will have their own reasons for using Azure Archive Storage, so let’s focus on how we can “push” files to the slow and cheap tier.

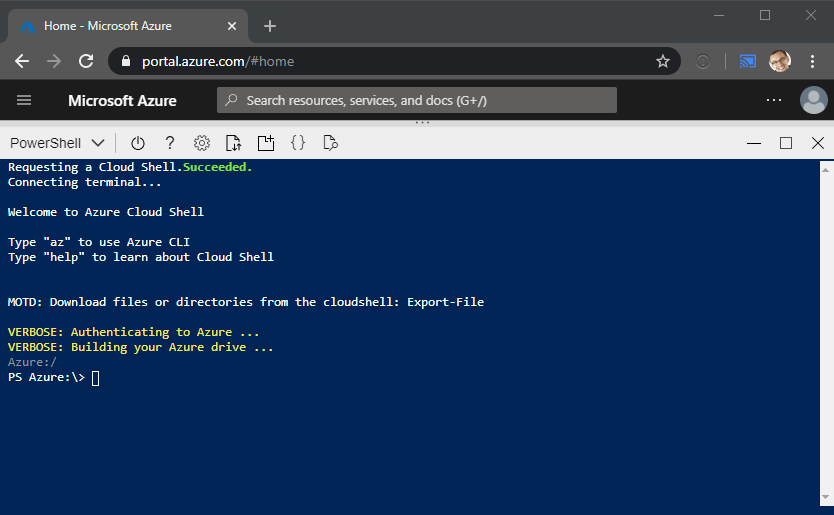

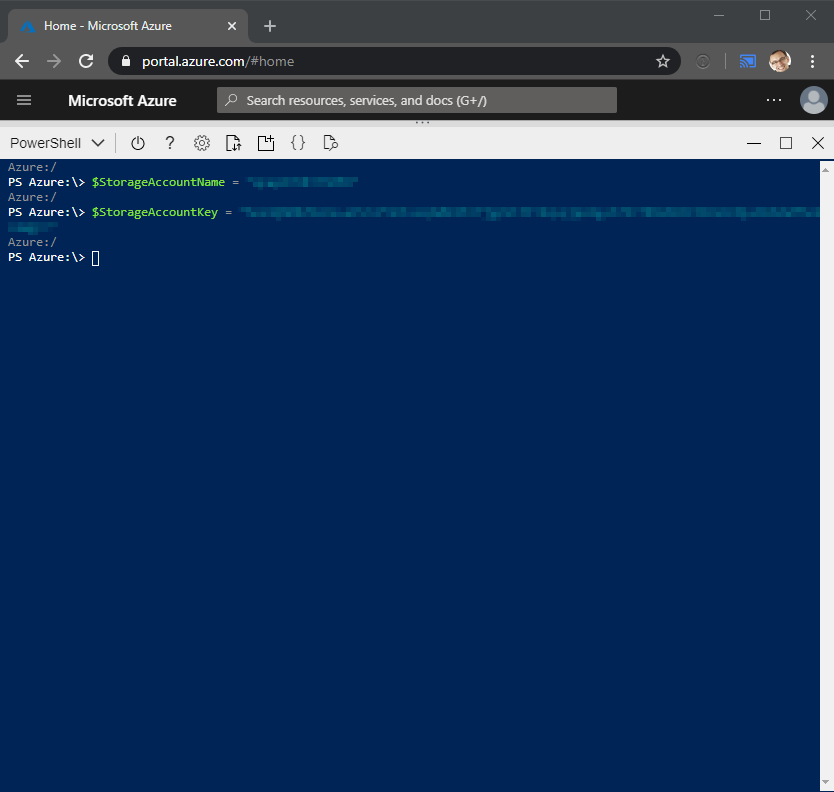

First of all, log in to the Azure Portal and open the Cloud Shell console in PowerShell mode:

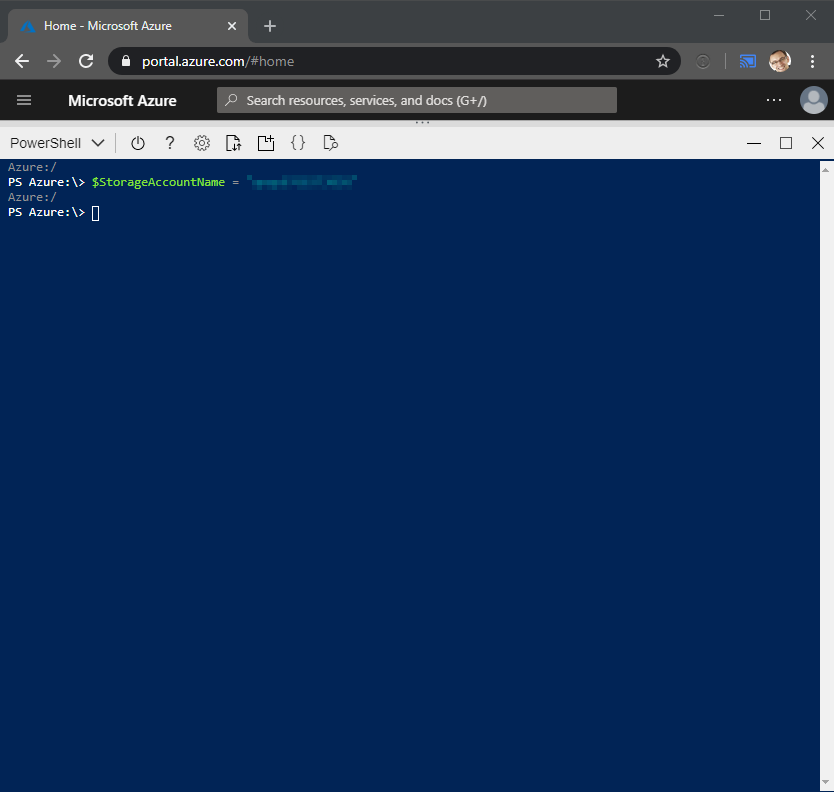

Once connected, run the script below against the Storage Account of your choice. You will need the Storage Account name and one of its access keys:

<# exclude metadata file and only process blobs that are NOT already in the archive tier

THIS MUST BE RUN FROM AZURE POWERSHELL CONSOLE #>

$StorageAccountName = "YOURSTORAGEACCOUNT"

$StorageAccountKey = "YOURSTORAGEACCOUNTKEY"

New-AzureStorageContext `

-StorageAccountName $StorageAccountName `

-StorageAccountKey $StorageAccountKey `

| Get-AzureStorageBlob -Container "archive" `

| ?{ $_.Name -NotLike "*metadata.tar.gz*" } `

| ?{ $_.ICloudBlob.Properties.StandardBlobTier -ne "Archive" } `

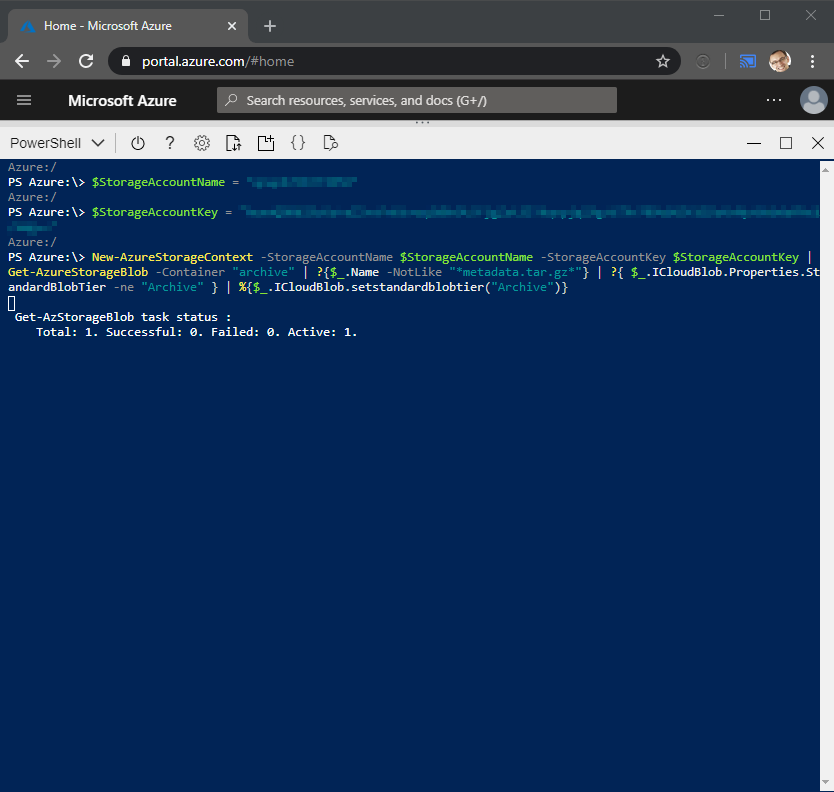

| %{ $_.ICloudBlob.setstandardblobtier("Archive") } First, set the $StorageAccountName:

Secondly, set $StorageAccountKey:

Lastly, run the archive process. In this example, we are archiving all files in the “archive” container that are not already in the Archive tier, excluding any file whose name contains metadata.tar.gz.

This approach also allows us to set up a scheduled runbook that archives any new files added to the container.
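A runbook version of the script above could look like the sketch below. It assumes an Azure Automation account with the Az modules imported and a managed identity that is allowed to list the storage account keys; `Select-BlobsToArchive` and `Invoke-BlobArchiving` are hypothetical names, and the schedule itself would be created separately (for example with `New-AzAutomationSchedule` and `Register-AzAutomationScheduledRunbook`). Splitting the filter into its own function keeps the "what gets archived" logic testable without a storage account.

```powershell
# Hypothetical helper: the same filter as the script above, usable on any blob list.
function Select-BlobsToArchive {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]$Blob,
        [string]$ExcludePattern = "*metadata.tar.gz*"
    )
    process {
        # Keep blobs that are not excluded by name and are not already archived
        if ($Blob.Name -notlike $ExcludePattern -and
            [string]$Blob.ICloudBlob.Properties.StandardBlobTier -ne "Archive") {
            $Blob
        }
    }
}

# Hypothetical runbook body: sign in with the Automation account's managed identity,
# build a storage context from the account key, then archive every eligible blob.
function Invoke-BlobArchiving {
    param(
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$StorageAccountName,
        [string]$ContainerName = "archive"
    )
    Connect-AzAccount -Identity | Out-Null
    $key = (Get-AzStorageAccountKey -ResourceGroupName $ResourceGroupName -Name $StorageAccountName)[0].Value
    $ctx = New-AzStorageContext -StorageAccountName $StorageAccountName -StorageAccountKey $key

    Get-AzStorageBlob -Container $ContainerName -Context $ctx |
        Select-BlobsToArchive |
        ForEach-Object {
            $_.ICloudBlob.SetStandardBlobTier("Archive")
            Write-Output "Archived $($_.Name)"
        }
}

# In the runbook, call it with your own (placeholder) names:
# Invoke-BlobArchiving -ResourceGroupName "YOURRESOURCEGROUP" -StorageAccountName "YOURSTORAGEACCOUNT"
```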

Conclusion

Archive Storage, whether Azure's or the AWS equivalent, Glacier, is great for long-term storage. However, we must ensure the rehydration process will be fast enough to comply with our RTO policies. In most cases, it may not be fast enough for operational backups, where we may have anywhere from a few minutes to a couple of hours to restore the database from the backup.

A good use case for Archive Storage is regulatory requirements where we have to keep some data for audit purposes and only retrieve it on a specific request where timing does not matter, but costs do.